Understanding Schedules of Reinforcement and Choice Behavior

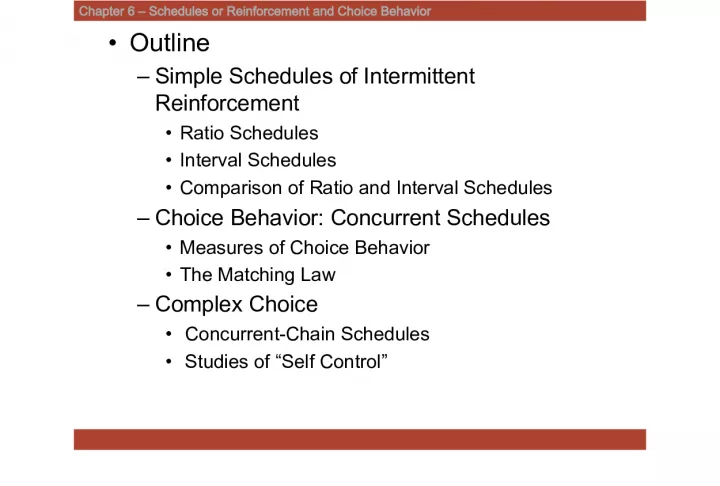

This chapter covers the basics of schedules of reinforcement, including simple ratio and interval schedules, concurrent schedules, and complex choice behavior. It also explores measures of choice behavior and studies of self-control.

marcel

Presentation Transcript

1. Chapter 6: Schedules of Reinforcement and Choice Behavior. Outline: Simple Schedules of Intermittent Reinforcement; Ratio Schedules; Interval Schedules; Comparison of Ratio and Interval Schedules; Choice Behavior: Concurrent Schedules; Measures of Choice Behavior; The Matching Law; Complex Choice; Concurrent-Chain Schedules; Studies of Self Control.

2. Simple Schedules of Intermittent Reinforcement. Ratio schedules: reinforcement (RF) depends only on the number of responses performed. Continuous reinforcement (CRF): each response is reinforced (bar press = food; key peck = food). CRF is rare outside the lab. The alternative is partial, or intermittent, RF.

3. Partial or Intermittent Schedules of Reinforcement. FR (fixed ratio): a fixed number of operants (responses) is required. CRF is FR 1. FR 10 = every 10th response is reinforced. Responding was originally recorded using a cumulative record; now computer-recorded data can be graphed similarly.

5. The cumulative record represents responding as a function of time; the slope of the line represents the rate of responding. Steeper = faster.

6. Responding on FR schedules. Faster responding = sooner RF, so responding tends to be pretty rapid. Postreinforcement pause: its length is directly related to the size of the FR. Small FR (FR 5) = shorter pauses; large FR (FR 100) = longer pauses, as animals wait a while before they start working. Domjan points out this may have more to do with the upcoming work than with the recent RF. Pre-ratio pause?

9. How would you respond if you received $1 on an FR 5 schedule? FR 500? Post-RF pauses? RF-history explanation of the post-RF pause: contiguity of the 1st response and RF. FR 5: the 1st response is close to RF, only 4 more. FR 100: the 1st response is a long way from RF, 99 more.

10. VR (variable ratio) schedules. The number of responses is still critical, but it varies from trial to trial. VR 10: reinforced on average for every 10th response. Sometimes only 1 or 2 responses are required; other times 15 or 19 responses are required.

11. Example (# = response requirement for each RF)

| VR 10 | FR 10 |
|-------|-------|
| 19 | 10 |
| 2 | 10 |
| 8 | 10 |
| 18 | 10 |
| 5 | 10 |
| 15 | 10 |
| 12 | 10 |
| 1 | 10 |

VR 10: (19 + 2 + 8 + 18 + 5 + 15 + 12 + 1)/8 = 10
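The averaging in the VR 10 example can be checked with a minimal sketch. The list holds the response requirements from the slide; the function name `mean_ratio` is a hypothetical helper, not something from the chapter.

```python
# Response requirements for the eight reinforcers in the VR 10 example.
vr_requirements = [19, 2, 8, 18, 5, 15, 12, 1]
# On FR 10 every reinforcer requires exactly 10 responses.
fr_requirements = [10] * 8


def mean_ratio(requirements):
    """Average number of responses required per reinforcer."""
    return sum(requirements) / len(requirements)


print(mean_ratio(vr_requirements))  # average requirement on the VR schedule
print(mean_ratio(fr_requirements))  # average requirement on the FR schedule
```

Both schedules demand the same average amount of work; they differ only in how predictable each individual requirement is.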

12. VR = very little postreinforcement pause. Why would this be? Slot machines: a very lean schedule of RF, but the next lever pull could result in a payoff.

13. FI (fixed interval) schedule. The 1st response after a given time period has elapsed is reinforced. FI 10 s: the 1st response after 10 s is reinforced. RF waits for the animal to respond; responses prior to 10 s are not reinforced. Produces scalloped responding patterns (the FI scallop).

16. Similarity of the FI scallop and the post-RF pause? FI 10 s? FI 120 s? The FI scallop has been used to assess animals' ability to time.

17. VI (variable interval) schedule. Time is still the important variable; however, the elapsed-time requirement varies around a set average. VI 120 s: time to RF can vary from a few seconds to a few minutes. $1 on a VI 10-minute schedule for button presses? RF could come in seconds, or it could take 20 minutes. Postreinforcement pause?

18. VI produces stable responding at a constant rate (peck..peck..peck..peck..peck), sampling whether enough time has passed. The rate on a VI schedule is not as fast as on FR and VR schedules. Why? Ratio schedules are based on responses: faster responding gets you to the response requirement quicker, regardless of what it is. On a VI schedule the number of responses doesn't matter, so a steady, even pace makes sense.

19. Interval Schedules and Limited Hold. Limited-hold restriction: the animal must respond within a certain amount of time after RF is set up. Like lunch at school: too late and you miss it.

20. Comparison of Ratio and Interval Schedules. What if you hold RF constant? Rat 1 = VR; Rat 2 = yoked control rat on VI. RF is set up for Rat 2 when Rat 1 gets to his RF. If Rat 1 responds faster, RF will set up sooner for Rat 2; if Rat 1 is slower, RF will be delayed.

21. Comparison of Ratio and Interval Schedules

22. Why is responding faster on ratio schedules? Molecular view: based on moment-by-moment RF. Inter-response times (IRTs): R1 ...... R2 followed by RF reinforces a long IRT; R1 .. R2 followed by RF reinforces a short IRT. Short IRTs are more likely to be reinforced on VR than on VI.

23. Molar view: feedback functions. The average RF rate during the session is the result of the average response rate. How can the animal increase reinforcement in the long run (across the whole session)? Ratio: respond faster = more RF for that day. FR 30: responding 1 per second, RF at 30 s; responding 2 per second, RF at 15 s.

24. Molar view, continued. Interval: no real benefit to responding faster. FI 30: responding 1 per second, RF at 30 or 31 s (30.5 on average). What if 2 per second? RF at 30 or 30.5 s (30.25 on average). Pay: salary? Clients?
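The FR 30 and FI 30 arithmetic from the molar view can be sketched as two small functions. This is a rough illustration under the slides' own simplification: responses are evenly spaced, and on the interval schedule the reinforced response falls either exactly at the interval or one inter-response gap later, so the two cases are averaged. The function names are hypothetical.

```python
def fr_time_to_reinforcer(ratio, responses_per_second):
    """Seconds needed to complete a fixed-ratio requirement."""
    return ratio / responses_per_second


def fi_mean_time_to_reinforcer(interval_s, responses_per_second):
    """Average of the earliest and latest reinforced response on an FI schedule.

    Assumes evenly spaced responses: the earliest possible reinforced response
    is at interval_s, the latest is one inter-response gap after it.
    """
    gap = 1 / responses_per_second
    earliest = interval_s
    latest = interval_s + gap
    return (earliest + latest) / 2


for rate in (1, 2):
    print("FR 30 at", rate, "per second:", fr_time_to_reinforcer(30, rate), "s")
    print("FI 30 at", rate, "per second:", fi_mean_time_to_reinforcer(30, rate), "s")
```

Doubling the response rate halves the time to reinforcement on the ratio schedule but saves only a quarter of a second on the interval schedule, which is the point of the feedback-function argument.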

25. Choice Behavior: Concurrent Schedules. The responding that we have discussed so far has involved schedules where there is only one thing to do. In real life we tend to have choices among various activities. Concurrent schedules examine how an animal allocates its responding between two schedules of reinforcement. The animals are free to switch back and forth.

27. Measures of choice behavior. Relative rate of responding for the left key: \( \frac{B_L}{B_L + B_R} \), where \(B_L\) = behavior on left and \(B_R\) = behavior on right. We are just dividing left-key responding by total responding.

28. This computation is very similar to the computation for the suppression ratio. If the animals are responding equally to each key, what should our ratio be? \( \frac{20}{20 + 20} = .50 \). If they respond more to the left key? \( \frac{40}{40 + 20} = .67 \). If they respond more to the right key? \( \frac{20}{20 + 40} = .33 \).
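The three worked ratios can be reproduced with a short sketch. The function name `relative_rate` is a hypothetical helper; the response counts are the ones used on the slide.

```python
def relative_rate(left, right):
    """Left-key responses divided by total responses on both keys."""
    return left / (left + right)


# (left responses, right responses) pairs from the slide.
for left, right in [(20, 20), (40, 20), (20, 40)]:
    print(left, right, round(relative_rate(left, right), 2))
```

A value of .50 means no preference, values above .50 mean a left-key preference, and values below .50 mean a right-key preference.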

29. Relative rate of responding for the right key. It is the complement of the left-key value (1 minus the left-key value), but it can also be calculated with the same formula: \( \frac{B_R}{B_R + B_L} \). Concurrent schedules? With VI 60 VI 60, the relative rate of responding for either key will be .5: responding is split equally between the two keys.

30. What about the relative rate of reinforcement? Left key? Simply divide the rate of reinforcement on the left key by total reinforcement: \( \frac{r_L}{r_L + r_R} \). VI 60 VI 60? If animals are dividing responding equally? .50 again.

31. The Matching Law: the relative rate of responding matches the relative rate of RF. When the same VI schedule is used on both keys: .50 and .50. What if different schedules of RF are used on each key?

32. Left key = VI 6 min (10 RF per hour); right key = VI 2 min (30 RF per hour). Left key relative rate of responding: \( \frac{B_L}{B_L + B_R} = \frac{r_L}{r_L + r_R} = \frac{10}{40} = .25 \). Right key? Simply the complement, .75. It can also be calculated directly: \( \frac{B_R}{B_R + B_L} = \frac{r_R}{r_R + r_L} = \frac{30}{40} = .75 \). Thus three times as much responding on the right key: \( .25 \times 3 = .75 \).

33. Matching Law, continued: a simpler computation. \( \frac{B_L}{B_R} = \frac{r_L}{r_R} = \frac{10}{30} \). Again, three times as much responding on the right key.
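The VI 6 min versus VI 2 min example can be worked through as a sketch. It assumes the animal earns every scheduled reinforcer, so a VI schedule of m minutes yields 60/m reinforcers per hour; both function names are hypothetical.

```python
def reinforcers_per_hour(vi_minutes):
    """Scheduled reinforcers per hour on a VI schedule (assumes all are collected)."""
    return 60 / vi_minutes


def matching_share(r_own, r_other):
    """Share of responding the matching law predicts for one key."""
    return r_own / (r_own + r_other)


r_left = reinforcers_per_hour(6)    # VI 6 min
r_right = reinforcers_per_hour(2)   # VI 2 min

left_share = matching_share(r_left, r_right)
right_share = matching_share(r_right, r_left)

print(r_left, r_right)              # reinforcers per hour on each key
print(left_share, right_share)      # predicted shares of responding
print(right_share / left_share)     # how many times more responding on the right
```

The last line corresponds to the simpler ratio form of the law, where the response ratio equals the reinforcer ratio.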

34. Herrnstein (1961) compared various VI schedules: the matching law. See Figure 6.5 in your book.

36. Application of the matching law. The matching law indicates that we match our behaviors to the available RF in the environment. Law, Bulow, and Meller (1998) predicted that adolescent girls who live in RF-barren environments would be more likely to engage in sexual behaviors, while girls who have a greater array of RF opportunities should allocate their behaviors toward those other activities. They surveyed girls about the activities they found rewarding and about their sexual activity. The matching law did a pretty good job of predicting sexual activity. Many kids today have a lot of RF opportunities (Xbox, texting friends, TV), which may make it more difficult to motivate behaviors you want them to do, like homework.

37. Complex Choice. Many of the choices we make require us to live with those choices; we can't always just switch back and forth. Go to college? Get a full-time job? Sometimes the short-term and long-term consequences (RF) of those choices are very different. Go to college: poor now, make more later. Get a full-time job: money now, less earning in the long run.

38. Concurrent-Chain Schedules. These allow us to examine complex choice behaviors in the lab. Example: do animals prefer a VR or an FR? Variety is the spice of life?

40. Choice of A: 10 minutes on VR 10. Choice of B: 10 minutes on FR 10. Subjects prefer the VR 10 over the FR 10. How do we know? Subjects will even prefer VR schedules that require somewhat more responding than the FR. Why do you think that happens?

41. Studies of Self control Often a matter of delaying immediate gratification (RF) in order to obtain a greater reward (RF) later. Study or go to party? Work in summer to pay for school or enjoy the time off?

42. Self control in pigeons? Rachlin and Green (1972). Choice A = immediate small reward; Choice B = large reward after a 4-s delay. Direct choice procedure: pigeons choose the immediate, small reward. Concurrent-chain procedure: pigeons could learn to choose the larger reward, but only if there was a long enough delay between the initial choice and the next link.

44. This idea that imposing a delay between a choice and the eventual outcomes helps organisms make better (higher RF) choices works for people too. Value-discounting function: \( V = \frac{M}{1 + KD} \), where V = value of the RF, M = magnitude of the RF, D = delay of the reward, and K is a correction factor for how much the animal is influenced by the delay. All this equation is saying is that the value of a reward is inversely affected by how long you have to wait to receive it. If there is no delay, D = 0, and the value is simply the magnitude over 1.

45. If I offer you $50 now or $100 now? \( \frac{50}{1 + 1 \times 0} = 50 \) versus \( \frac{100}{1 + 1 \times 0} = 100 \). $50 now or $100 next year? \( \frac{50}{1 + 1 \times 0} = 50 \) versus \( \frac{100}{1 + 1 \times 12} = 7.7 \).
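The value-discounting computation can be sketched in a few lines. As in the worked example, the sketch assumes K = 1 and measures delay in months (so one year is D = 12); the function name `discounted_value` is hypothetical.

```python
def discounted_value(magnitude, delay, k=1.0):
    """Value-discounting function V = M / (1 + K * D)."""
    return magnitude / (1 + k * delay)


# $50 now versus $100 now: with no delay, value equals magnitude.
print(discounted_value(50, 0), discounted_value(100, 0))

# $50 now versus $100 in 12 months with K = 1.
print(discounted_value(50, 0), round(discounted_value(100, 12), 1))

# A smaller K (assumed here for illustration only) discounts less steeply.
print(round(discounted_value(100, 12, k=0.05), 1))
```

With K = 1 the delayed $100 is worth less than the immediate $50, so the immediate option wins; with a much smaller K the delayed reward keeps most of its value.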

47. As noted above, K is a factor that allows us to correct these delay functions for individual differences in delay discounting. People with steep delay-discounting functions will have a more difficult time delaying immediate gratification to meet long-term goals: young children, drug abusers. Madden, Petry, Badger, and Bickel (1997): two groups, heroin-dependent patients and controls, were offered hypothetical choices between a smaller amount now and a larger amount later. Amounts varied: $1,000, $990, $960, $920, $850, $800, $750, $700, $650, $600, $550, $500, $450, $400, $350, $300, $250, $200, $150, $100, $80, $60, $40, $20, $10, $5, and $1. Delays varied: 1 week, 2 weeks, 2 months, 6 months, 1 year, 5 years, and 25 years.

50. It has been described mathematically in the following way (Baum, 1974): \( \frac{R_A}{R_B} = b \left( \frac{r_A}{r_B} \right)^{a} \). \(R_A\) and \(R_B\) refer to rates of responding on keys A and B (i.e., left and right); \(r_A\) and \(r_B\) refer to the rates of reinforcement on those keys. When the value of exponent a is equal to 1.0, a simple matching relationship occurs, where the ratio of responses perfectly matches the ratio of reinforcers obtained. The variable b is used to adjust for response-effort differences between A and B when they are unequal, or for cases where the reinforcers for A and B are unequal.
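Baum's equation can be explored with a minimal sketch. The function name `response_ratio` and the non-default parameter values (a = 0.8, b = 2.0) are assumptions chosen only to show how the exponent and the bias term change the prediction; they are not values from the chapter.

```python
def response_ratio(r_a, r_b, a=1.0, b=1.0):
    """Predicted R_A / R_B = b * (r_A / r_B) ** a."""
    return b * (r_a / r_b) ** a


# Simple matching (a = 1, b = 1): response ratio equals reinforcer ratio.
print(response_ratio(10, 30))

# Exponent below 1 (illustrative value): responding is less extreme than the
# reinforcer ratio, so the predicted ratio moves closer to 1.
print(response_ratio(10, 30, a=0.8))

# b above 1 (illustrative value): a constant shift toward key A.
print(response_ratio(10, 30, b=2.0))
```

With the defaults, the function reduces to the simple ratio form of the matching law, so the 10 versus 30 reinforcers-per-hour example again predicts one third as much responding on key A as on key B.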