Development of a 3D Tight Binding Electronic Structure Simulator for Multi-Million Atom Systems

This research focuses on the development of NEMO 3D, a tight binding based electronic structure simulator capable of handling multi-million atom systems. The project was carried out by Gerhard Klimeck and his team at the Applied Cluster Computing Technologies Group at the Jet Propulsion Laboratory, California Institute of Technology, under contract with NASA.



Presentation Transcript

1. Gerhard Klimeck, Applied Cluster Computing Technologies Group, GROUP ACT. Development of a 3-D tight-binding-based electronic structure simulator for multi-million atom systems: NEMO 3-D. Gerhard Klimeck, Fabiano Oyafuso, Paul von Allmen (Jet Propulsion Laboratory, Caltech); Tim Boykin (U Alabama in Huntsville). gekco@jpl.nasa.gov, 818-354-2182, http://hpc.jpl.nasa.gov/PEP/gekco. This research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration.

2. Presentation Outline. Introduction / Motivation: NASA motivation; critical look at the SIA Roadmap; nano-scale device examples; what is a quantum dot?; modeling agenda. Software Issues: problem size; parallel computing; graphical user interfaces. Physics modeling results: strain; alloy disorder; interface interdiffusion. Conclusion / Outlook.

3. NASA radiation and temperature requirements are outside commercial and military interest. Figure labels: Limit of Commercial Interest; Limit of Military Interest.

4. Technology Push Toward Fundamental Limitations. Commercial market pushes computing (FLOPS/weight/power). Enabled by: device miniaturization; chip size increase. Limited by: costs of fabrication; discrete atoms/electrons. Figure labels: 1-D feature 5-100; 2-D feature; Lithography; Growth; Moore's Law for Lithography.

5. Technology Push Toward Fundamental Limitations. Commercial market pushes computing (FLOPS/weight/power). Enabled by: device miniaturization; chip size increase. Limited by: costs of fabrication; discrete atoms/electrons. Additional NASA requirements: high radiation tolerance; extreme temperature operation (hot/cold). Figure labels: 1-D feature 5-100; 2-D feature; Lithography; Growth; Moore's Law for Lithography.

6. Technology Push Toward Fundamental Limitations. Commercial market pushes computing (FLOPS/weight/power). Enabled by: device miniaturization; chip size increase. Limited by: costs of fabrication; discrete atoms/electrons. Additional NASA requirements: high radiation tolerance; extreme temperature operation (hot/cold). Figure labels: 1-D feature 5-100; 2-D feature; Lithography; Growth.

7. Technology Push Toward Fundamental Limitations. Commercial market pushes computing (FLOPS/weight/power). Enabled by: device miniaturization; chip size increase. Limited by: costs of fabrication; discrete atoms/electrons. Additional NASA requirements: high radiation tolerance; extreme temperature operation (hot/cold). Quantum dots: detectors / lasers; memory and logic. Quantum dots go beyond the SIA roadmap and enable near and long term NASA applications. Figure labels: 1-D feature 5-100; 2-D feature; Lithography; Growth.

8. What is a Quantum Dot? Basic Application Mechanisms. Physical structure: a well-conducting domain surrounded in all 3 dimensions by low-conducting region(s); domain size on the nanometer scale. Electronic structure: contains a countable number of electrons; electron energy may be quantized -> artificial atoms (coupled QD -> molecule).


10. What is a Quantum Dot? Basic Application Mechanisms. Physical structure: a well-conducting domain surrounded in all 3 dimensions by low-conducting region(s); domain size on the nanometer scale. Electronic structure: contains a countable number of electrons; electron energy may be quantized -> artificial atoms (coupled QD -> molecule). Mechanisms: photon absorption; photon emission; tunneling/transport; occupancy of states. Applications: detectors / input; lasers / output; logic / memory.

11. What is a Quantum Dot? Basic Application Mechanisms. Physical structure: a well-conducting domain surrounded in all 3 dimensions by low-conducting region(s); domain size on the nanometer scale. Electronic structure: contains a countable number of electrons; electron energy may be quantized -> artificial atoms (coupled QD -> molecule). Mechanisms: photon absorption; photon emission; tunneling/transport; occupancy of states. Applications: detectors / input; lasers / output; logic / memory. Quantum dots are artificial atoms that can be custom designed for a variety of applications.

12. Nanotechnology / Nanoelectronic Example Implementations. Low-dimensional quantum confinement can be achieved in a variety of material systems. Self-assembled: InGaAs on GaAs, pyramidal or dome shaped; R. Leon, JPL (1998); JPL application: IR sensors. Nanocrystals: Si implanted in SiO2; Atwater, Caltech (1996); JPL application: non-volatile memory. Nanotube arrays: Jimmy Xu, Brown Univ. (1999); JPL applications: transducers, filters. Molecular dots: ruthenium-based molecule [Ru4(NH3)16(C4H4N2)4]10+, proposed by Marya Lieberman, Notre Dame (1999); computing applications.

13. Need for Nanoelectronic Simulation. Problems: the design space is huge (choice of materials, shapes, orientations, dopings, heat anneals); characterizations are incomplete and invasive / destructive. Simulation impact: aid design (fast, cost effective) -> device performance, successful for 1-D quantum devices; aid characterization (non-invasive, more accurate) -> structure and doping analysis, successful for 1-D quantum devices. Modeling, characterization and fabrication are inseparable for nano-scale devices. Figure labels: Simulation; Characterization; Fabrication.

14. Nano-scale Device Analysis / Synthesis: Development of a Bottom-Up Nanoelectronic Modeling Tool. Assertions / Problems: nanoscale structures are built today! The design space is huge (choice of materials, compositions, doping, size, shape). Radiation on today's sub-micron devices modifies the electronics on a nanoscale. Approach: deliver a 3-D atomistic simulation tool; enable analysis of arbitrary crystal structures, particles, atom compositions and bond/structure at arbitrary temperatures and ambient electric and magnetic fields. Collaborators: U. of Alabama, Ames, Purdue, Ohio State, NIST. Figure labels: Nanoscale Structures (~20nm); Atomic Orbitals, size: 0.2nm.

15. Nano-scale Device Analysis / Synthesis: Development of a Bottom-Up Nanoelectronic Modeling Tool. Assertions / Problems: nanoscale structures are built today! The design space is huge (choice of materials, compositions, doping, size, shape). Radiation on today's sub-micron devices modifies the electronics on a nanoscale. Approach: deliver a 3-D atomistic simulation tool; enable analysis of arbitrary crystal structures, particles, atom compositions and bond/structure at arbitrary temperatures and ambient electric and magnetic fields. Collaborators: U. of Alabama, Ames, Purdue, Ohio State, NIST. NASA relevance: enable new devices needed for NASA missions beyond the existing industry roadmap (water detection -> 2-5 µm lasers and detectors; avionics -> high density, low power computing); analyze state-of-the-art devices for non-commercial environments (Europa -> radiation and low temperature effects, aging and failure modes; Jovian system -> magnetic field effects; Venus -> high temperature materials: SiGe). Impact: low cost development of revolutionary technology; modeling will narrow the empirical search space! Figure labels: Nanoscale Structures (~20nm); Atomic Orbitals, size: 0.2nm; New Devices for Sensing and Computing; Analyze Devices: Environment and Failures.

16. Presentation Outline. Introduction / Motivation: NASA motivation; critical look at the SIA Roadmap; nano-scale device examples; what is a quantum dot?; modeling agenda. Software Issues: problem size; parallel computing; graphical user interfaces. Physics modeling results: strain; alloy disorder; interface interdiffusion. Conclusion / Outlook.

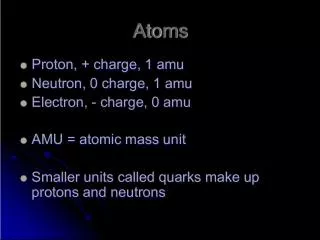

17. How big is a realistic problem? How many atoms? Rule of thumb: 43 atoms/nm³. 1 quantum dot: 40x40x15 nm³ -> 1 million atoms. 2x2 array of dots: 90x90x15 nm³ -> 5.2 million atoms. 70x70x70 nm³ cube of Si -> 15 million atoms. What is the basis? 5 orbitals (sp3s*), 2 spins -> basis = 10. 10 orbitals (sp3d5s*), 2 spins -> basis = 20. How big are the matrices? Atoms x basis ~ 10⁷ x 10⁷, sparse, 4 neighbors/atom. Storage = 10⁶ x 5 x (20x20) x 16 bytes / 2 = 16 GB. A deca-nano device contains >1 million atoms. Figure labels: Ga, As, Al, As; Non-ideal interface; Impurity in quantum dot; Basis States.
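
The sizing estimates on this slide can be reproduced with a few lines of Python. This is a sketch of the arithmetic only; the function names are illustrative and not part of NEMO 3-D. It assumes that the factor 5 in the storage formula counts one on-site block plus four neighbor blocks per atom, and that the division by 2 reflects storing only half of a Hermitian matrix.

```python
ATOMS_PER_NM3 = 43  # rule of thumb quoted on the slide


def atom_count(lx_nm, ly_nm, lz_nm, density=ATOMS_PER_NM3):
    """Estimated number of atoms in a box of the given size (in nm)."""
    return lx_nm * ly_nm * lz_nm * density


def matrix_order(n_atoms, basis):
    """Order of the Hamiltonian matrix: atoms times basis states per atom."""
    return n_atoms * basis


def hamiltonian_bytes(n_atoms, basis, neighbors=4, bytes_per_entry=16,
                      hermitian=True):
    """Storage estimate: (neighbors + 1) dense basis x basis blocks per atom.

    bytes_per_entry=16 corresponds to one double-precision complex number.
    The halving for a Hermitian matrix is an interpretation of the '/2'
    on the slide.
    """
    total = n_atoms * (neighbors + 1) * basis * basis * bytes_per_entry
    return total // 2 if hermitian else total


if __name__ == "__main__":
    print("single dot   :", atom_count(40, 40, 15), "atoms")
    print("2x2 dot array:", atom_count(90, 90, 15), "atoms")
    print("Si cube      :", atom_count(70, 70, 70), "atoms")
    print("matrix order :", matrix_order(10**6, 10))
    print("storage (GB) :", hamiltonian_bytes(10**6, 20) / 1e9)
```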

18. Four Generations of Cluster Experience. Hyglac (1997): 16 Pentium Pros, 200 MHz; 128 MB RAM per node, 2 GB total; 5 GB disk per node, 80 GB total; 100 Mb/s Ethernet crossbar; Linux, MPI; 3.2 GFlops; Gordon Bell Prize 1997. Nimrod (1999): 32 Pentium IIIs, 450 MHz; 512 MB RAM per node, 16 GB total; 8 GB disk per node, 256 GB total; 100 Mb/s Ethernet crossbar; Linux, MPI; 14.4 GFlops. Pluto (2001): 64 Pentium IIIs, 800 MHz, dual CPUs; 2 GB RAM per node, 64 GB total; 10 GB disk per node, 320 GB total; 2 Gb/s Myricom crossbar; Linux, MPI; 51.2 GFlops. NewYork (2002): 66 Xserve G4, 1 GHz; 1 GB RAM per node, 33 GB total; 60 GB disk per node, 2 TB total; 100 Mb/s Ethernet crossbar; Mac OS X, MPI; 495 GFlops.

19. Parallelization of NEMO 3-D. Divide the simulation domain into slices. Communication only from one slice to the next (nearest neighbor). Communication overhead across the surfaces of the slices. Limiting operation: sparse matrix-vector multiplication. Enable Hamiltonian storage or re-computation on the fly.
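
The slice decomposition can be illustrated with a toy example. The sketch below is not NEMO 3-D code: it replaces the 3-D Hamiltonian with a 1-D nearest-neighbor chain and simulates the message exchange with plain Python lists instead of MPI. All names are hypothetical. It shows that each slice needs only one boundary value from each adjacent slice to compute its part of the matrix-vector product.

```python
def chain_matvec(x, onsite=2.0, hop=-1.0):
    """Serial reference: y = H x for a nearest-neighbor chain."""
    n = len(x)
    y = []
    for i in range(n):
        v = onsite * x[i]
        if i > 0:
            v += hop * x[i - 1]
        if i < n - 1:
            v += hop * x[i + 1]
        y.append(v)
    return y


def split_into_slices(x, n_slices):
    """Cut the vector into contiguous, nearly equal slices."""
    base, extra = divmod(len(x), n_slices)
    slices, start = [], 0
    for rank in range(n_slices):
        size = base + (1 if rank < extra else 0)
        slices.append(x[start:start + size])
        start += size
    return slices


def sliced_matvec(x, n_slices, onsite=2.0, hop=-1.0):
    """Same product, computed slice by slice (requires n_slices <= len(x))."""
    slices = split_into_slices(x, n_slices)
    # "Communication" step: each slice receives only the surface value
    # of its left and right neighbor (zero at the ends of the chain).
    halos = []
    for rank in range(n_slices):
        left = slices[rank - 1][-1] if rank > 0 else 0.0
        right = slices[rank + 1][0] if rank < n_slices - 1 else 0.0
        halos.append((left, right))
    # Local compute step: no further data from other slices is needed.
    y = []
    for local, (left, right) in zip(slices, halos):
        padded = [left] + local + [right]
        for i in range(1, len(padded) - 1):
            y.append(onsite * padded[i] + hop * padded[i - 1]
                     + hop * padded[i + 1])
    return y
```

In a real distributed-memory code the halo values would travel as messages between neighboring ranks, so the communication volume grows with the slice surface while the compute work grows with the slice volume.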

20. Code Parallelization. Problem: need to calculate eigenvalues of a complex matrix of the order of 40 million => must parallelize code. Approach: evaluate 2 parallel programming paradigms. Shared memory (OpenMP): CPUs can access the same memory. Distributed memory (MPI): CPUs exchange data through messages; data synchronization is performed explicitly by the program. Vision: utilize a designated Beowulf cluster of PCs as a workhorse for these simulations. Each node might have 1-4 shared memory CPUs on one motherboard. Envision a mixed code with outer level MPI parallelism and inner level OpenMP parallelism. This will run on a commercial supercomputer like an SGI Origin 2000 as well as a Beowulf. Results: inner level OpenMP parallelism does not speed up the code significantly. Dynamic creation and destruction of threads is too expensive. Decided to abandon the OpenMP implementation and concentrate on the optimization and scaling of the MPI version. Impact: enabled simulation of 2 million atom systems with 20 orbitals on each atom => matrix of order 40 million. 30 Lanczos iterations (sparse matrix-vector multiply). Algorithm scales very nicely on a commodity cluster (Beowulf).
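
The matrix order quoted here follows from 2 million atoms x 20 orbitals = 40 million, and each Lanczos iteration costs one sparse matrix-vector multiply. The sketch below is a minimal, serial, real-symmetric Lanczos iteration in pure Python, applied to a toy nearest-neighbor chain rather than a tight-binding Hamiltonian. It is an illustration of the technique under those assumptions, not the solver used in NEMO 3-D: it uses no reorthogonalization, and the lowest Ritz value is found by Sturm-sequence bisection. All function names are hypothetical.

```python
import math
import random


def chain_matvec(x, onsite=2.0, hop=-1.0):
    """Matrix-free y = H x for a nearest-neighbor chain (toy Hamiltonian)."""
    y = [onsite * xi for xi in x]
    for i in range(len(x) - 1):
        y[i] += hop * x[i + 1]
        y[i + 1] += hop * x[i]
    return y


def lanczos(matvec, n, n_iter, seed=0):
    """Return the diagonal and off-diagonal of the Lanczos tridiagonal matrix."""
    rng = random.Random(seed)
    v = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(c * c for c in v))
    v = [c / norm for c in v]
    v_prev = [0.0] * n
    alphas, betas = [], []
    beta = 0.0
    for j in range(n_iter):
        w = matvec(v)  # the one matrix-vector multiply per iteration
        alpha = sum(wi * vi for wi, vi in zip(w, v))
        w = [wi - alpha * vi - beta * pi for wi, vi, pi in zip(w, v, v_prev)]
        alphas.append(alpha)
        beta = math.sqrt(sum(c * c for c in w))
        if j == n_iter - 1 or beta < 1e-12:
            break
        betas.append(beta)
        v_prev, v = v, [c / beta for c in w]
    return alphas, betas


def count_eigenvalues_below(alphas, betas, x):
    """Sturm count: eigenvalues of the tridiagonal matrix smaller than x."""
    count, q = 0, 1.0
    for i, a in enumerate(alphas):
        off = betas[i - 1] ** 2 if i > 0 else 0.0
        q = a - x - off / q
        if q == 0.0:
            q = 1e-300
        if q < 0.0:
            count += 1
    return count


def lowest_ritz_value(alphas, betas):
    """Smallest eigenvalue of the tridiagonal matrix by bisection."""
    m = len(alphas)
    radius = [(betas[i - 1] if i > 0 else 0.0) +
              (betas[i] if i < m - 1 else 0.0) for i in range(m)]
    lo = min(a - r for a, r in zip(alphas, radius))  # Gershgorin bounds
    hi = max(a + r for a, r in zip(alphas, radius))
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if count_eigenvalues_below(alphas, betas, mid) >= 1:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)
```

For example, `lowest_ritz_value(*lanczos(chain_matvec, 200, 30))` gives an upper estimate of the lowest eigenvalue of a 200-site chain after 30 iterations; more iterations tighten the estimate.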

21. Parallel Eigenvalue Solver on a Beowulf (32 node, dual CPU Pentium III, 800MHz, 64GB RAM, Linux).

22. Parallel Eigenvalue Solver on a Beowulf (32 node, dual CPU Pentium III, 800MHz, 64GB RAM, Linux). Eigenvalue computation ranging from 1/4 to 16 million atoms. Hamiltonian storage provides a speed-up of >3x. If the Hamiltonian is stored, the maximum system size is reduced to about 8 million atoms. The dual CPU Intel Pentium III has serious memory latency problems. Computation scales almost linearly with system size.

23. Comparison of 2 Beowulf Generations. 2.5 years old: 32 CPUs, 450 MHz Pentium III, 500 MB RAM each CPU. 1.5 years old: 64 CPUs (32 nodes), 933 MHz Pentium III, 1 GB RAM each node. Brand new: 128 CPUs (64 nodes), 2.2 GHz P4, 1 GB RAM each node (results not in plot). 450 MHz and 933 MHz results: plot total CPU cycles (time x MHz rating). Our problem remains CPU limited, with small communication overhead. No problem feeding the memory to the CPUs. Doubling the CPU frequency doubled the speed on the slower machines only; the 2.2 GHz machine does not scale as nicely.

24. Comparison of 2 Computing Platforms. SGI Origin 2000: 128 CPUs, 300 MHz R12000, 2 GB RAM per 4 CPUs (4 years old). Beowulf: 64 CPUs, 933 MHz Pentium III, 1 GB per 2 CPUs (1.5 years old). If the matrix is recomputed on the fly: Beowulf is ~8x faster. If the matrix is stored: Beowulf is ~2.5x faster. The SGI has very fast memory access. A cluster of commodity PCs can beat a supercomputer for our problem.

25. Parallelization of Strain Calculation. Problem: serial strain computation: ~43 min. Serial electronic structure calculation: ~9 hours. Parallel electronic structure computation on 20 CPUs: ~30 min. Solution: parallelize the strain calculation as well. Result: reduce time to 2-5 minutes on a parallel machine. See a difference between a fast 2 Gbps and a 100 Mbps network. Do not see that difference in the electronic structure calculation. Parallel strain computation is more communication dependent than the electronic structure calculation.
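
The timings on this slide explain why the strain step had to be parallelized: once the electronic structure calculation ran in about 30 minutes, a 43 minute serial strain step would have taken more than half of the total run time. The short sketch below works through that arithmetic. The helper names are illustrative, and it assumes the strain and electronic structure steps run one after the other, which the slide does not state explicitly.

```python
SERIAL_STRAIN_MIN = 43.0            # serial strain computation
SERIAL_ELECTRONIC_MIN = 9 * 60.0    # serial electronic structure, ~9 hours
PARALLEL_ELECTRONIC_MIN = 30.0      # electronic structure on 20 CPUs
N_CPUS = 20
PARALLEL_STRAIN_MIN = (2.0, 5.0)    # reported range after parallelization


def speedup(serial_min, parallel_min):
    """Ratio of serial to parallel run time."""
    return serial_min / parallel_min


def efficiency(serial_min, parallel_min, n_cpus):
    """Speedup divided by the number of CPUs used."""
    return speedup(serial_min, parallel_min) / n_cpus


def strain_share(strain_min, electronic_min):
    """Fraction of the combined run time spent in the strain step."""
    return strain_min / (strain_min + electronic_min)


if __name__ == "__main__":
    s = speedup(SERIAL_ELECTRONIC_MIN, PARALLEL_ELECTRONIC_MIN)
    e = efficiency(SERIAL_ELECTRONIC_MIN, PARALLEL_ELECTRONIC_MIN, N_CPUS)
    print(f"electronic structure speedup: {s:.0f}x, efficiency {e:.0%}")
    before = strain_share(SERIAL_STRAIN_MIN, PARALLEL_ELECTRONIC_MIN)
    print(f"serial strain share of run time: {before:.0%}")
    for t in PARALLEL_STRAIN_MIN:
        after = strain_share(t, PARALLEL_ELECTRONIC_MIN)
        print(f"parallel strain ({t:.0f} min) share: {after:.0%}")
```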

26. Hierarchical Ordering of User Input. Fact of life: typical simulators have a huge laundry list of parameters. Problem: what parameters are really needed? What is the dependence between these parameters? Approach: parent/child related hierarchical ordering; dynamic window generation. Example window, Quantum Potential: exchange & correlation? How to go from bias to bias? Specify desired outputs. Quantum region: where are the wave-functions? Non-equilibrium region: where are the reservoirs? Quantum charge region: where is the charge calculated quantum mechanically? Example window, Semi-classical Potential: specify desired outputs. Quantum region: where are the wave-functions? Non-equilibrium region: where are the reservoirs?

27. Generic Data Structure I/O. Dynamic GUI design: data structure member descriptor -> I/O for GUI or files. Example input: potential=Hartree hbarovertau=0.0066 Ec=FALSE < start=45, end=69 >. A translator maps the input to the data structure members: PotType potential; real hbarovertau; Boolean Ec; RangeStruct NonEq. Graphical user interface: read, create. File/batch user interface: read, create. Flexible software design enables use in various different simulators. Figure labels: Theorist; Software Engineer.

28. XML Based Generic Data Structure I/O. Example input: potential=Hartree hbarovertau=0.0066 Ec=FALSE < start=45, end=69 >. A translator maps the input to the XML structure members: PotType potential; real hbarovertau; Boolean Ec; RangeStruct NonEq. Graphical user interface: read, create. File/batch user interface: read, create. Theorist: read, create C++ data structures.
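
The member-descriptor idea on the two slides above can be sketched as a table that names each member and its type, with generic read and write routines driven by that table. The code below is a hypothetical Python illustration; the original tool uses C++ data structures and XML, and none of these function names come from it. Only the three scalar members from the slide example are handled; the RangeStruct member is left out because its input syntax is not fully recoverable from the transcript.

```python
def parse_bool(text):
    """Convert TRUE/FALSE (case-insensitive) to a Python bool."""
    upper = text.upper()
    if upper not in ("TRUE", "FALSE"):
        raise ValueError(f"not a Boolean: {text!r}")
    return upper == "TRUE"


# Member descriptors: name -> (type label from the slide, converter).
# PotType is treated as a plain string here, which is an assumption.
DESCRIPTORS = {
    "potential": ("PotType", str),
    "hbarovertau": ("real", float),
    "Ec": ("Boolean", parse_bool),
}


def read_members(text, descriptors=DESCRIPTORS):
    """Parse 'name=value' tokens using only the descriptor table."""
    values = {}
    for token in text.split():
        name, sep, raw = token.partition("=")
        if not sep or name not in descriptors:
            raise ValueError(f"unknown or malformed entry: {token!r}")
        _type_label, convert = descriptors[name]
        values[name] = convert(raw)
    return values


def write_members(values, descriptors=DESCRIPTORS):
    """Serialize members back to text, in descriptor order."""
    parts = []
    for name in descriptors:
        if name in values:
            value = values[name]
            if isinstance(value, bool):
                rendered = "TRUE" if value else "FALSE"
            else:
                rendered = str(value)
            parts.append(f"{name}={rendered}")
    return " ".join(parts)


if __name__ == "__main__":
    members = read_members("potential=Hartree hbarovertau=0.0066 Ec=FALSE")
    print(members)
    print(write_members(members))
```

Because the reader and writer consult only the descriptor table, the same table could in principle drive a GUI form or a batch file parser, which is the flexibility the slides describe.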

29. Presentation Outline. Introduction / Motivation: NASA motivation; critical look at the SIA Roadmap; nano-scale device examples; what is a quantum dot?; modeling agenda. Software Issues: problem size; parallel computing; graphical user interfaces. Physics modeling results: strain; alloy disorder; interface interdiffusion. Conclusion / Outlook.

30. Mechanical Strain Calculations. Problem: self-assembled dot formation is due to strain. Small mechanical strain (5% bond length) -> dramatic effects on electronic structures. Approach: nanomechanical strain calculation; nanoelectronic strain calculation. Mechanics problem: minimize elastic strain (Keating). Electronics problem: effect of overlap changes; orbital overlap changes bandgaps and masses. Pyramidal InAs dot simulation: base 7 nm x 7 nm, height 3 nm, embedded in GaAs. Unstrained dot: E_eh = 1.02 eV. Strained dot: E_eh = 1.33 eV. Results: implemented a mechanical strain model; implemented an atomistic bandstructure model that comprehends strain. Impact: can simulate realistic quantum dots; can estimate optical transition energies. Small strain has dramatic effects on the electronic structure. Figure labels: Ga, In, As; R, st, i, j; Equilibrium; Strained.
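
The slide names Keating's model for the elastic energy that is minimized. As a rough illustration, the sketch below evaluates a Keating-type valence-force-field energy for a single atom with four tetrahedral neighbors. It makes several assumptions that do not come from the slides: a single ideal bond length d and single force constants alpha and beta for all bonds, one common choice of prefactors (3/16 for bond stretching, 3/8 for bond bending), and placeholder parameter values in arbitrary units. It is not the NEMO 3-D implementation.

```python
import math
from itertools import combinations


def ideal_tetrahedral_bonds(d):
    """Four bond vectors of length d with the ideal tetrahedral angle."""
    s = d / math.sqrt(3.0)
    return [(s, s, s), (s, -s, -s), (-s, s, -s), (-s, -s, s)]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def keating_energy(bonds, d, alpha, beta):
    """Keating-type energy of one atom from its bond vectors.

    Stretch term: penalizes |r|^2 deviating from d^2.
    Bend term: penalizes r_j . r_k deviating from -d^2/3 (ideal angle).
    Prefactor convention is an assumption; others differ by constant factors.
    """
    stretch = sum((3.0 * alpha / (16.0 * d * d)) * (dot(r, r) - d * d) ** 2
                  for r in bonds)
    bend = sum((3.0 * beta / (8.0 * d * d)) * (dot(rj, rk) + d * d / 3.0) ** 2
               for rj, rk in combinations(bonds, 2))
    return stretch + bend


def scale(bonds, factor):
    """Uniformly stretch or compress all bond vectors."""
    return [tuple(factor * c for c in r) for r in bonds]


if __name__ == "__main__":
    d, alpha, beta = 1.0, 1.0, 0.25  # placeholder values, arbitrary units
    bonds = ideal_tetrahedral_bonds(d)
    print("ideal geometry :", keating_energy(bonds, d, alpha, beta))
    print("5% bond stretch:", keating_energy(scale(bonds, 1.05), d, alpha, beta))
```

A strain calculation in this spirit would sum such terms over all atoms and move the atoms until the total energy is minimal; the energy is zero only when every bond has its ideal length and angle.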

31. Alloy Disorder in Quantum Dots. In0.6Ga0.4As lens shaped dot (diameter = 30 nm, height = 5 nm, GaAs embedded). Problem: cations are randomly distributed in alloy dots. Does alloy disorder limit electronic structure uniformity for dot ensembles? Approach: simulate a statistical ensemble of alloyed dots. Requires an atomistic simulation tool. Results: simulated 50 dots with random cation distributions. Inhomogeneous broadening factor of 9.4meV due to alloy disorder. Impact: fundamental uniformity limit for an ensemble of alloy-based quantum dots. Alloy disorder presents a theoretical lower limit on optical linewidths. Figure labels: In and Ga atoms are randomly distributed; Inhomogeneous Broadening?; Simulation of Alloy Dot Ensemble; E_eh=1.05eV; =0.1-5meV; Measured =34.6 meV (R. Leon, PRB, 58, R4262); 5meV Theoretical Lower Limit.
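
The first step of the ensemble approach, generating many dots with different random cation placements, can be sketched as follows. This is an illustration only: the number of cation sites per dot is a made-up placeholder, the function names are hypothetical, and no electronic structure is computed. It shows how the realized indium fraction already fluctuates from dot to dot at a fixed nominal composition of 0.6.

```python
import random
import statistics


def random_alloy_dot(n_cation_sites, indium_fraction, rng):
    """Assign In or Ga independently to each cation site."""
    return ["In" if rng.random() < indium_fraction else "Ga"
            for _ in range(n_cation_sites)]


def ensemble_indium_fractions(n_dots, n_cation_sites,
                              indium_fraction=0.6, seed=0):
    """Realized In fraction for each dot in a random ensemble."""
    rng = random.Random(seed)
    fractions = []
    for _ in range(n_dots):
        dot = random_alloy_dot(n_cation_sites, indium_fraction, rng)
        fractions.append(dot.count("In") / n_cation_sites)
    return fractions


if __name__ == "__main__":
    # 50 dots as on the slide; 2000 cation sites is a placeholder value.
    fractions = ensemble_indium_fractions(n_dots=50, n_cation_sites=2000)
    print("mean In fraction:", statistics.mean(fractions))
    print("spread (std dev):", statistics.pstdev(fractions))
```

In the study described on the slide, each such configuration would be passed to the atomistic solver, and the spread of the resulting transition energies gives the broadening attributed to alloy disorder.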

32. Conclusions / Future Vision. Made significant progress towards a general atomistic simulation tool: parallelization (16 million atoms), graded junctions, alloy disorder, strain. Envision this tool to have impact on quantum dots, end of SIA roadmap issues, and molectronics. Modeling is an integral part of nano-science. We are looking for motivated people (CS, EE, Phys) at all degree levels! Figure labels: Quantum Dots; End of SIA Roadmap; (Ba,Sr)TiO3; TiO2; Dopant Fluctuations in Ultra-scaled CMOS; Electron Transport in Exotic Dielectrics; Transport in Molecules; Carbon Nanotubes; DNA; Grading: Abrupt, Graded; Atomistic Simulation.

33. Atomistic Grading Simulation. Problem: quantum dot interfaces may not be sharp. There may be cation redistribution around the interface => grading of the concentration. How does the interfacial grading affect the electronic structure? Approach: simulate the quantum dot atomistically with graded interfaces as a function of interdiffusion length. Pyramidal InAs in GaAs, diameter = 10 nm, height = 4.2 nm, 5 samples per data point. Results: more Ga in the quantum dot raises the transition energies. Less Ga in the barriers softens the barriers, reduces the binding of the excited states to the quantum dot and reduces ΔE = E2 - E1. Impact: verifies that the experimentally suggested interdiffusion process may be responsible for the blue shift and the reduction in ΔE. Interdiffusion widens the bandgap => blueshift. Figure labels: Ga, In, As; Cartoon Visualization of Interdiffusion; Slice through 2 Qdots with thickness of 3 atoms, with and without interdiffusion.

34. Progressive Spacecraft Miniaturization. Low weight, low power and high efficiency have a special meaning to NASA. Figure labels: Spacecraft Mass (1000 kg, 100 kg, 10 kg); Past, Present, Future; Cassini; Mars Pathfinder; Pluto/Kuiper Express; Microspacecraft; NEAR; Solar Probe; Stardust; Europa Orbiter; Lewis Clark; Mars 98 Lander/Orbiter.

35. Motivation. Our enthusiasm for nanotechnology stems from its potential value in addressing deep space technology needs: autonomous navigation and maneuvering; miniature in-situ sensors; radiation and temperature tolerant electronics. Nanotechnology will provide essential computing and sensing capabilities. Figure labels: Distributed Sensors; Penetrators; Integrated Inflatable Sailcraft; Miniaturized Rovers; Miniaturized Spacecraft; Hydrobot; Atmospheric Probes; Landing on Small Bodies.

36. Parallel Eigenvalue Solver on a Beowulf (32 node, dual CPU Pentium III, 800MHz, Linux). Eigenvalue computation ranging from 1/4 to 16 million atoms. Problems are too big for a single CPU (memory requirements). sp3s* basis set. Matrix sizes up to 1.6x10⁸ x 1.6x10⁸. Recompute the Hamiltonian matrix on the fly. Measure time for 30 Lanczos iterations; the full problem takes 1000-5000 iterations. Solver behaves almost linearly in system size!