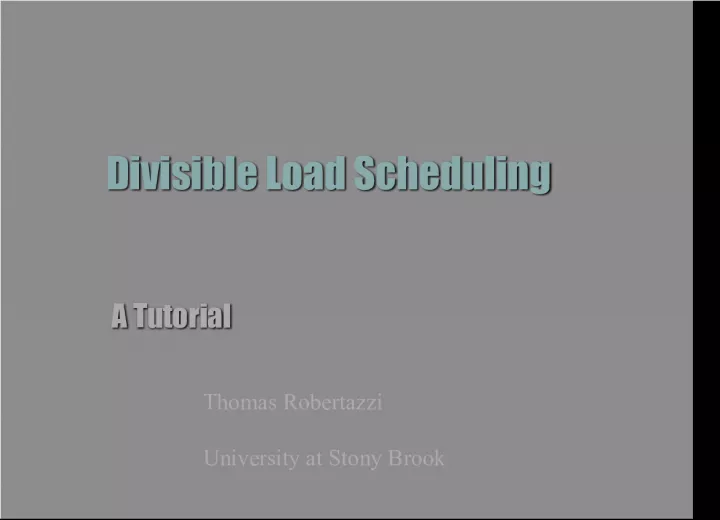

Divisible Load Scheduling: A Tutorial on Computational Load Partitioning

Learn about divisible load scheduling, which allows for computational loads to be partitioned and distributed across multiple processors and links in a network. This tutorial by Thomas Robertazzi from the University at Stony Brook explains the basics of this technique and its applications.

- Uploaded on | 1 Views

-

madden

madden

About Divisible Load Scheduling: A Tutorial on Computational Load Partitioning

PowerPoint presentation about 'Divisible Load Scheduling: A Tutorial on Computational Load Partitioning'. This presentation describes the topic on Learn about divisible load scheduling, which allows for computational loads to be partitioned and distributed across multiple processors and links in a network. This tutorial by Thomas Robertazzi from the University at Stony Brook explains the basics of this technique and its applications.. The key topics included in this slideshow are Divisible load, scheduling, computation, partitioning, network,. Download this presentation absolutely free.

Presentation Transcript

1. Divisible Load Scheduling Divisible Load Scheduling A Tutorial A Tutorial Thomas Robertazzi University at Stony Brook

2. What is a Divisible Load? What is a Divisible Load? b A computational & networkable load that is arbitrarily partitionable (divisible) amongst processors and links. b A computational & networkable load that is arbitrarily partitionable (divisible) amongst processors and links. b There are no precedence relations. b There are no precedence relations.

3. Simple Application Example Simple Application Example b Problem: Sum 1000 trillion numbers b Problem: Sum 1000 trillion numbers b Approach: Partition the numbers among 100 processors b Approach: Partition the numbers among 100 processors b But how? b But how?

4. Simple Application Example Simple Application Example b To optimize solution time (maximize speedup) one needs to take into account heterogeneous link and processor speeds, computation and communication intensities, interconnection topology and scheduling policy. b To optimize solution time (maximize speedup) one needs to take into account heterogeneous link and processor speeds, computation and communication intensities, interconnection topology and scheduling policy. b Divisible Load Scheduling Theory Can Do This! b Divisible Load Scheduling Theory Can Do This!

5. Applications (Generic) Applications (Generic) b Grid Computing/Meta-computing b Grid Computing/Meta-computing b Data Intensive Computing b Data Intensive Computing b Sensor Processing b Sensor Processing b Image Processing b Image Processing b Scientific/Engineering Computing b Scientific/Engineering Computing b Financial Computing b Financial Computing

6. Applications (Specific) Applications (Specific) b Pattern Searching b Pattern Searching b Database Computation b Database Computation b Matrix-Vector Computation b Matrix-Vector Computation b E&M Field Calculation (CAD) b E&M Field Calculation (CAD) b Edge Detection b Edge Detection

7. DLT Modeling Advantages DLT Modeling Advantages b Linear and Deterministic Modeling b Linear and Deterministic Modeling b Tractable Recursive/Linear Equation Solution b Tractable Recursive/Linear Equation Solution b Schematic Language b Schematic Language b Equivalent Elements b Equivalent Elements b Many Applications b Many Applications

8. Interconnection Topologies Interconnection Topologies b Linear Daisy Chain b Linear Daisy Chain b Bus b Bus b Single Level and Multilevel Trees b Single Level and Multilevel Trees b Mesh b Mesh b Hypercube b Hypercube

9. Directions: Scalability Directions: Scalability 1 3 2 1 1 1 Sequential Distribution (Saturation) Simultaneous Distribution (Scalable) Hung & Robertazzi Cheng & Robertazzi

10. An Example An Example b Model Specifications: b Model Specifications: A star network( single level tree network), and multi-level tree. A star network( single level tree network), and multi-level tree. Computation and transmission time is a linear function of the size of load. Computation and transmission time is a linear function of the size of load. Level to Level: Store and Forward Switching Level to Level: Store and Forward Switching Same Level: Concurrent Load Distribution. Same Level: Concurrent Load Distribution.

11. b Children without Front End: b Children without Front End: b After receiving the assigned data, each child proceeds to process the data. b After receiving the assigned data, each child proceeds to process the data.

12. b Timing Diagram (single level tree) : b Timing Diagram (single level tree) : b Children without Front End b Children without Front End

13. m+1 unknows vs. m+1 Eqs. m+1 unknows vs. m+1 Eqs. b Recursive equations: b Recursive equations: b Normalization equation: b Normalization equation:

14. b Distribution Solution: b Distribution Solution:

15. b The load distribution solution is similar to the solution of the state-dependent M/M/1 queuing system. b The load distribution solution is similar to the solution of the state-dependent M/M/1 queuing system.

16. Similarities to Queueing Theory Similarities to Queueing Theory b Linear model and tractable solutions b Linear model and tractable solutions b Schematic Language b Schematic Language b Equivalent Elelements b Equivalent Elelements b Infinite Size Networks b Infinite Size Networks

17. b Speedup Analysis b Speedup Analysis

18. b Speedup Analysis (continued) b Speedup Analysis (continued)

19. b Tree Network b Tree Network b (Children without Front Ends) b (Children without Front Ends)

20. Collapsing single level trees Collapsing single level trees

21. Bandwidth of Fat Tree Bandwidth of Fat Tree b Definition: The bandwidth of level j in a fat tree can be defined as p j-1 z. b Definition: The bandwidth of level j in a fat tree can be defined as p j-1 z.

22. Directions: Sequencing and Installments Directions: Sequencing and Installments b Daisy Chain Surprise b Daisy Chain Surprise b Efficiency Rule b Efficiency Rule Ghose, Mani & Bharadwaj

23. Directions: Sequencing and Installments Directions: Sequencing and Installments b Multi-installment for Sequential Distribution b Multi-installment for Sequential Distribution 1 2 3 4 5 6 Ghose, Mani & Bharadwaj

24. Directions: Sequencing and Installments Directions: Sequencing and Installments Diminishing returns in using multi-installment distribution. Ghose, Mani & Bharadwaj

25. Directions: Sequencing and Installments Directions: Sequencing and Installments Drozdowski

26. Directions: Time Varying Modeling Directions: Time Varying Modeling Can be solved with integral calculus. Sohn & Robertazzi

27. Directions: Monetary Cost Optimization Directions: Monetary Cost Optimization Min C Total n c n w n T cp Min C Total n c n w n T cp n=1 N Bus Processors Optimal Sequential Distribution if: c n-1 w n-1 less than c n w n for all n Sohn, Luryi & Robertazzi

28. Directions: Monetary Cost Optimization Directions: Monetary Cost Optimization b 2 US Patents: b 2 US Patents: Patent 5,889,989 (1999): Processor Cost Patent 5,889,989 (1999): Processor Cost Patent 6,370,560 (2001): Processor and Patent 6,370,560 (2001): Processor and Link Cost Link Cost Enabling technology for an open e-commerce Enabling technology for an open e-commerce market in leased proprietary computing. market in leased proprietary computing. Sohn, Charcranoon, Luryi & Robertazzi

29. Directions: Database Modeling Directions: Database Modeling Expected time to find multiple records in flat file database Ko & Robertazzi

30. Directions: Realism Directions: Realism Finite Buffers (Bharadwaj) Finite Buffers (Bharadwaj) Job Granularity (Bharadwaj) Job Granularity (Bharadwaj) Queueing Model Integration Queueing Model Integration

31. Directions: Experimental Work Directions: Experimental Work Database Join (Drozdowski)

32. Directions: Future Research Directions: Future Research b Operating Systems: b Operating Systems: Incorporate divisible load scheduling Incorporate divisible load scheduling into (distributed) operating systems into (distributed) operating systems b Measurement Process Modeling: b Measurement Process Modeling: Integrate measurement process Integrate measurement process modeling into divisible scheduling modeling into divisible scheduling

33. Directions: Future Research Directions: Future Research b Pipelining (Dutot) b Pipelining (Dutot) Concept: Distribute load to Concept: Distribute load to further processors first for further processors first for speedup improvement speedup improvement Improvement reported for daisy chains Improvement reported for daisy chains

34. Directions: Future Research Directions: Future Research b System Parameter Estimation (Ghose): b System Parameter Estimation (Ghose): Concept: Send small probing loads across Concept: Send small probing loads across links and to processors to estimate links and to processors to estimate available effort available effort Challenge: Rapid change in link & processor Challenge: Rapid change in link & processor state state

35. DLT has a Good Future DLT has a Good Future b Many Applications including b Many Applications including wireless sensor networks wireless sensor networks b Tractable (Modeling & Computation) b Tractable (Modeling & Computation) b Rich Theoretical Basis b Rich Theoretical Basis

36. Thank you! Thank you! Questions??? Questions???