Real Time Semi-supervised Source Separation for Online Speech Denoising and Video Chatting

This presentation discusses an online PLCA algorithm developed for real time semi-supervised source separation to denoise speech in teleconferences and improve the quality of video chatting. The algorithm can separate speech from noise sources like computer keyboards in real time.

- Uploaded on | 0 Views

-

ramilo

ramilo

About Real Time Semi-supervised Source Separation for Online Speech Denoising and Video Chatting

PowerPoint presentation about 'Real Time Semi-supervised Source Separation for Online Speech Denoising and Video Chatting'. This presentation describes the topic on This presentation discusses an online PLCA algorithm developed for real time semi-supervised source separation to denoise speech in teleconferences and improve the quality of video chatting. The algorithm can separate speech from noise sources like computer keyboards in real time.. The key topics included in this slideshow are PLCA, source separation, real time, speech denoising, online algorithm, video chatting,. Download this presentation absolutely free.

Presentation Transcript

1. Online PLCA for Real-Time Semi-supervised Source Separation Zhiyao Duan , Gautham J. Mysore , Paris Smaragdis 1. EECS Department, Northwestern University 2. Advanced Technology Labs, Adobe Systems Inc 3. University of Illinois at Urbana-Champaign Presentation at LVA/ICA on March 14 , 2012 1 1 2 2,3

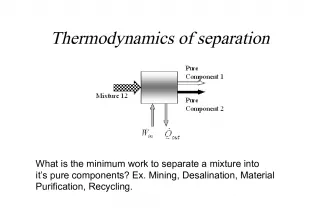

2. Speech denoising in teleconference Source 1: noise (e.g. computer keyboard) Source 2: speech Online separation algorithms are needed Real-time Source Separation is Important 2 Video Chatting

3. Spectrogram Decomposition Probabilistic Latent Component Analysis (PLCA) Nonnegative Matrix Factorization (NMF) Dictionary of basis spectra Activation weights of basis spectra Minimize reconst. error dict., weights Observed spectra Reconstructed spectra

4. Supervised Separation: Easy Online 4 Train source dictionaries: Trained dict. for Source 1 Decompose sound mixture: Reconstruct Source 2: Activation weights Trained dict. for Source 2 Source dict.s

5. Semi -supervised Separation: Offline 5 Train source dictionaries: Trained dict. for Source 1 Trained dict. for Source 2 Decompose sound mixture: Reconstruct Source 2: Activation weights Source dict.s No Training Data!

6. Problem Spectrogram decomposition-based semi- supervised separation is offline Not applicable for Real-time separation Very long recordings Can we make it online? 6

7. The First Attempt Objective: decompose the current mixture frame well Do semi-supervised source separation on the current mixture frame Mission Impossible! Many more unknowns than equations Learned S2s dictionary will be almost the same as the mixture frame (overfitting) Need to constrain S2s dictionary! 7 S1 dict. (given) S2 dict. S2 dict. Separated S2

8. Proposed Online Semi-supervised PLCA Decompose the current mixture frame and some previous mixture frames (called running buffer ) S1 dict. (trained) S2 dict. S2 weights Buffer frames (constraint) (weights of previous frames are already learned) S1 weights Current frame (objective) Weights of current frame 8 Buffer frames reconst. error Current frame reconst. error Tradeoff Buffer size S2 dict., Weights of current frame

9. Update S2s Dictionary Frame t Frame t+1 9 Warm initialization

10. Buffer Frames Not too many or too old Otherwise algorithm will be slow, and constraints might be too strong We used 60 most recent, qualified frames (about 1 second long) Qualified: must contain S2s signals They are used to constrain S2s dictionary How to judge if a mixture frame contains S2 or not? 10

11. Which Mixture Frame Contains S2? Assume: Mixture = S1 + S2 Decompose the mixture frame only using S1s dictionary If reconstruction error is large Probably contains S2 Semi-supervised separation using S1s dict. (the proposed algorithm) This frame goes to the buffer If reconstruction error is small Probably no S2 Supervised separation using S1s dict. and S2s up-to-date dict. This frame does not go to buffer 11 S1 dict. (trained) S1 dict. (trained) S2 dict. (up-to-date)

12. Advantages The learned S2s dictionary avoids overfitting the current mixture frame Compared to offline PLCA, the learned S2s dictionary is learned from the current frame and buffer frames Smaller (more compact) More localized Constantly being updated Convergence is fast at each frame Since from Frame t to t+1, the S2s dictionary has a warm initialization 12

13. Experiments Data Set Speech denoising S1 = noise: train noise dictionary beforehand S2 = speech: update speech dictionary on the fly 10 kinds of non-stationary noise Birds, casino, cicadas, computer keyboard, eating chips, frogs, jungle, machine guns, motorcycles and ocean 6 speakers (3 male and 3 female), from [1] 5 SNRs (-10, -5, 0, 5, 10 dB) All combinations generate our noisy speech dataset About 300 * 15 seconds = 1.25 hours [1] Loizou, P. (2007), Speech Enhancement: Theory and Practice , CRC Press, Boca Raton: FL. 13

14. Experiments Results (1) 14 Offline PLCA (20 speech bases) Proposed online PLCA (7 speech bases) Online NMF (O-IS-NMF), [Lefvre et al, 2011] not designed for separation; designed for learning dictionaries

15. Experimental Results (2) Noise dict. size Tradeoff: constraint vs. objective

16. Speech + computer keyboard noise Speech + bird noise Examples Noisy speech 16 SNR=10dB Noisy speech Offline PLCA Online PLCA SDR (dB) 10.0 9.8 12.9 SIR (dB) 10.0 26.0 20.7 SAR (dB) 75.4 9.9 13.7 SNR=5dB Noisy speech Offline PLCA Online PLCA SDR (dB) 5.0 11.3 9.9 SIR (dB) 5.0 27.8 23.5 SAR (dB) 77.2 11.4 10.1

17. Conclusions Proposed an online-PLCA algorithm for semi- supervised source separation Algorithmic properties Learns a smaller, more localized dictionary Fast convergence in each frame Achieved almost as good results as offline PLCA, and significantly better than an existing online NMF algorithm 17